Word2Vec vs BERT

As Natural Language Processing (NLP) methods and models continue to advance, it can be overwhelming to keep up with the latest developments, let alone objectively understand the differences between state-of-the-art models.

If you’re working with natural language data, it’s important to understand how models differ and how one model might overcome the shortcomings of others. In this article we’ll cover two popular models, Word2Vec and BERT, and discuss some advantages and drawbacks of each.

Background and Key Differences

BERT, or Bidirectional Encoder Representations from Transformers, is a technique for bidirectional training of Transformers on natural language modeling tasks. Language models that are trained bidirectionally can learn deeper context from language than single-direction models. BERT generates context-aware embeddings, so a word can have multiple representations (each representation, in this case, is a vector), one for each context it appears in.

Word2Vec is a method for creating word embeddings that predates BERT. Word2Vec generates embeddings that are independent of context, so each word is represented by a single vector rather than by multiple vectors.

BERT’s input includes position embeddings, so it can take in the position of each word in a sequence and incorporate that into the word’s embedding, while Word2Vec embeddings aren’t able to account for word position.

Embeddings

Word2Vec offers pre-trained word embeddings that anyone can use off the shelf. The embeddings are key-value pairs, essentially one-to-one mappings between words and their respective vectors. Word2Vec takes a single word as input and outputs a single vector representation of that word. In theory, you don’t even need the Word2Vec model, just the pre-determined embeddings.
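Because the mapping is static, it can be sketched as a plain dictionary lookup. The snippet below is only an illustration: the words, the three-dimensional vectors, and the `embed` helper are made up for this example and are not real Word2Vec output (real vectors typically have hundreds of dimensions).

```python
# Toy stand-in for a pre-trained Word2Vec table: one fixed vector per word.
# The words and 3-dimensional values are invented for illustration only.
embeddings = {
    "river": [0.9, 0.1, 0.0],
    "bank": [0.5, 0.5, 0.2],
    "loan": [0.0, 0.2, 0.9],
}


def embed(sentence):
    """Look up each word independently; surrounding words play no role."""
    return [embeddings[word] for word in sentence.lower().split()]


river_sentence = embed("river bank")
loan_sentence = embed("bank loan")

# "bank" receives the identical vector in both sentences.
same_vector = river_sentence[1] == loan_sentence[0]
print(same_vector)
```

Note that nothing in the lookup depends on a model at inference time, which is why the stored table alone is enough.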

Since BERT generates contextual embeddings, it takes a sequence (usually a sentence) as input rather than a single word. BERT needs to be shown the context that surrounding words provide before it can generate a word embedding. With BERT, you do need the actual model, because the vector representations of words vary with the specific sequences you input. The output is one fixed-length vector per token in the input; a single vector for the whole sentence is commonly derived from these, for example from the special [CLS] token or by pooling the token vectors.
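To make the idea of context dependence concrete, here is a deliberately simplified sketch. It is not BERT: it uses a single attention-style mixing step with no learned weights, and the two-dimensional vectors and the `contextualize` function are invented for this example. It only illustrates the key property, that the same word receives a different vector depending on the words around it.

```python
import math

# Invented 2-dimensional starting vectors; these are not real model weights.
static = {
    "river": [1.0, 0.0],
    "bank": [0.5, 0.5],
    "loan": [0.0, 1.0],
}


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextualize(tokens):
    """Return one vector per token, each a weighted mix of every token in the sequence."""
    vectors = [static[t] for t in tokens]
    outputs = []
    for query in vectors:
        # Score the current token against all tokens, to its left and right.
        scores = [sum(q * k for q, k in zip(query, key)) for key in vectors]
        weights = softmax(scores)
        mixed = [
            sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(len(query))
        ]
        outputs.append(mixed)
    return outputs


bank_near_river = contextualize(["river", "bank"])[1]
bank_near_loan = contextualize(["bank", "loan"])[0]
print(bank_near_river, bank_near_loan)
```

In this toy setup the vector for "bank" is pulled toward "river" in one sequence and toward "loan" in the other, so the two results differ. A real BERT model stacks many such layers with learned parameters, but the input-sequence dependence is the same in spirit.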

Out-of-Vocabulary

Since Word2Vec learns embeddings at the word level, it can only generate embeddings for words that existed in its training set (aka its “vocabulary space”). This is a major drawback of Word2Vec: it simply doesn’t support Out-of-Vocabulary words.
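A minimal sketch of what this limitation looks like with a word-level table follows. The vocabulary, the vectors, and the `lookup` helper are hypothetical; returning `None` is a choice made for this example, and real libraries typically raise an error for a missing word instead.

```python
# Hypothetical word-level vocabulary with invented vectors.
word_vectors = {"cat": [0.2, 0.7], "dog": [0.3, 0.6]}


def lookup(word):
    """Return the stored vector, or None when the word was never seen in training."""
    return word_vectors.get(word)


known = lookup("cat")
unknown = lookup("catlike")  # not in the vocabulary, even though "cat" is
print(known, unknown)
```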

In contrast, BERT learns representations at the subword level, so a BERT model will have a smaller vocabulary space than the number of unique words in its training corpus. In turn, BERT is able to generate embeddings for words outside of its vocabulary space by composing them from known subword pieces, giving it a near-infinite effective vocabulary.
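The subword idea can be sketched with a greedy longest-match-first split, in the style of the WordPiece tokenizer used with BERT. The tiny vocabulary and the `wordpiece` function below are assumptions made for illustration; a real BERT vocabulary has tens of thousands of entries and the production tokenizer handles casing, punctuation, and other details that are omitted here.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
vocab = {"em", "##bed", "##ding", "##s", "play", "##ing", "[UNK]"}


def wordpiece(word):
    """Split a word into the longest matching vocabulary pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


print(wordpiece("embeddings"))  # ['em', '##bed', '##ding', '##s']
print(wordpiece("playing"))     # ['play', '##ing']
```

Neither "embeddings" nor "playing" is in the toy vocabulary as a whole word, yet both can be represented because their pieces are. Each piece then gets its own embedding from the model.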

Conclusion

We covered just a few of the high-level differences between Word2Vec and BERT in this article. For a deeper dive into the mechanics behind transformers, check out other posts from our Deep Learning 101 series.

What is a Transformer and Why Should I Care?

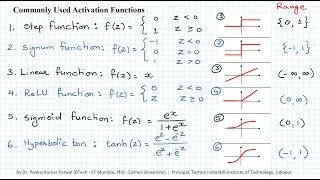

Transformer Activation Functions Explainer

For a guide through the different NLP applications using code and examples, check out these recommended titles:

Looking for a customized deep dive into this or related topics? Need in-depth answers or walkthroughs of how to compute or use embeddings? Send us an email to inquire about corporate trainings and workshops!